COMMENT

Evaluation of research in India – are we doing it right?

Muthu Madhan, Subbiah Gunasekaran, Subbiah Arunachalam*

Published online: March 23, 2018

DOI: https://doi.org/10.20529/IJME.2018.024

Abstract

The evaluation of performance in scientific research at any level – whether at the individual, institutional, research council or country level – is not easy. Traditionally, research evaluation at the individual and institutional levels has depended largely on peer opinion, but with the rapid growth of science over the last century and the availability of databases and scientometric techniques, quantitative indicators have gained importance. Both peer review and metrics are subject to flaws, more so in India because of the way they are used. Government agencies, funding bodies and academic and research institutions in India suffer from the impact factor and h-index syndrome. The uninformed use of indicators such as average and cumulative impact factors and the arbitrary criteria stipulated by agencies such as the University Grants Commission, Indian Council of Medical Research and the Medical Council of India for selection and promotion of faculty have made it difficult to distinguish good science from the bad and the indifferent. The exaggerated importance given by these agencies to the number of publications, irrespective of what they report, has led to an ethical crisis in scholarly communication and the reward system in science. These agencies seem to be unconcerned about the proliferation of predatory journals and conferences. After giving examples of the bizarre use of indicators and arbitrary recruitment and evaluation practices in India, we summarise the merits of peer review and quantitative indicators and the evaluation practices followed elsewhere.

This paper looks critically at two issues that characterise Indian science, viz (i) the misuse of metrics, particularly impact factor (IF) and h-index, in assessing individual researchers and institutions, and (ii) poor research evaluation practices. As the past performance of individual researchers and the funds they seek and obtain for subsequent projects are inextricably intertwined, such misuse of metrics is prevalent in project selection and funding as well.

This study is based on facts gathered from publicly available sources such as the websites of organisations and the literature. After explaining the meaning of impact factor and h-index and how not to use them, we give many examples of misuse in reports by Indian funding and regulatory agencies. In the next two sections we give examples of the arbitrariness of the criteria and indicators used by the agencies for the selection and promotion of faculty, selection of research fellows, and funding. We follow this up with the evaluation practices in use elsewhere. If we have cited only a few examples relating to medicine, it is for two reasons: one, medicine forms only a small part of the Indian academic and research enterprise; and two, what applies to research and higher education in other areas applies to medicine as well.

Misuse of metrics

The regulatory and funding agencies give too much importance to the number of papers published and use indicators such as average IF, cumulative IF and IF aggregate in the selection of researchers for awards, the selection and promotion of faculty, awarding fellowships to students and grants to departments and institutions, and thus contribute to the lowering of standards of academic evaluation, scholarly communication, and the country’s research enterprise.

Impact factors, provided by Clarivate Analytics in their Journal Citation Reports (JCR), are applicable to journals and not to individual articles published in the journals. Nor is there such a thing as impact factors of individuals or institutions. One cannot attribute the IF of a journal to a paper published in that journal, as not all papers are cited the same number of times; and the variation could be of two to three orders of magnitude. This metonymic fallacy is the root cause of many ills.

Let us consider the 860 articles and reviews that Nature published in 2013, for example. These have been cited 99,539 times as seen from Web of Science on January 20, 2017; about 160 papers (<19%) account for half of these citations, with the top 1% contributing nearly 12% of all citations, the top 10% contributing 37% of citations, and the bottom 1% contributing 0.09% of citations. While hardly any paper published in Nature or Science goes uncited, the same is not true of most other journals. A substantial proportion of the papers indexed in Web of Science over a period of more than a hundred years has not been cited at all, and only about 0.5% have been cited more than 200 times (1). Of the six million papers published globally between 2006 and 2013, more than a fifth (21%) have not been cited (2). As Lehman et al (3) have pointed out, the journal literature is made up of “a small number of active, highly cited papers embedded in a sea of inactive and uncited papers”. Also, papers in different fields get cited to different extents. And, as the tremendously skewed distribution of impact factors of journals indicates, only a minority of journals receives the majority of citations.
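The kind of skew described above can be measured directly from per-paper citation counts. The Python sketch below is illustrative only: the ten citation counts in `sample` are invented and are not the Nature 2013 data, and the function names are hypothetical.

```python
# Illustrative sketch: how concentrated are citations within a set of papers?
# The citation counts in `sample` are hypothetical, not Web of Science data.


def top_share(citations, fraction):
    """Share of all citations received by the most-cited `fraction` of papers."""
    ranked = sorted(citations, reverse=True)
    k = max(1, round(len(ranked) * fraction))  # always at least one paper
    total = sum(ranked)
    return sum(ranked[:k]) / total if total else 0.0


def papers_for_half(citations):
    """Smallest number of top-ranked papers that together hold half the citations."""
    ranked = sorted(citations, reverse=True)
    total = sum(ranked)
    running = 0
    for n, c in enumerate(ranked, start=1):
        running += c
        if 2 * running >= total:
            return n
    return len(ranked)


def uncited_share(citations):
    """Fraction of papers that have never been cited."""
    return sum(1 for c in citations if c == 0) / len(citations)


sample = [500, 120, 60, 30, 20, 10, 5, 3, 2, 0]
print(f"top 10% share: {top_share(sample, 0.10):.2f}")  # top 10% share: 0.67
print(f"papers holding half the citations: {papers_for_half(sample)}")  # papers holding half the citations: 1
print(f"uncited: {uncited_share(sample):.0%}")  # uncited: 10%
```

The mean of this hypothetical list is 75 citations per paper, a figure that describes none of the ten papers well; this is the same reason a journal-level average says little about any individual article.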

A Web of Science search for citations to papers published during 2006–2013 made on February 20, 2017, revealed that there is a wide variation in the number of articles not cited among different fields. To cite examples of some fields, the percentage shares of articles and reviews that have not yet been cited even once are: Immunology (2.2%), Neuroscience (3%), Nanoscience and Nanotechnology (4%), Geosciences (5.6%), Surgery (7%), Spectroscopy (8.6%) and Mathematics (16%).

The regulatory and funding agencies lay emphasis on the h-index (4), which is based on the number of papers published by an individual and the number of times they are cited. To arrive at it, an author’s papers are arranged in descending order of the number of times cited; the h-index is the largest number h such that h of those papers have each received at least h citations. Thus an author’s h-index is 10 if 10 of his/her papers have each received at least 10 citations (and there are not 11 papers with at least 11 citations each), irrespective of the total number of papers he/she has published. The index does not take into account the actual number of citations received by each paper, even if these far exceed the value of the h-index, and can thus lead to misleading conclusions.
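The calculation just described can be sketched in a few lines of Python. The two citation records below are hypothetical; they show two authors with the same h-index whose papers have received very different numbers of citations.

```python
# Sketch of the h-index calculation described above.
# The two citation records are hypothetical.


def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # every later paper has no more citations than this one
    return h


author_a = [10] * 10                   # ten papers, 10 citations each
author_b = [900, 450, 300] + [10] * 7  # three very highly cited papers

for name, record in (("A", author_a), ("B", author_b)):
    print(name, "h =", h_index(record), "total citations =", sum(record))
# A h = 10 total citations = 100
# B h = 10 total citations = 1720
```

Both authors score 10, although author B’s papers have been cited more than 17 times as often; the 900, 450 and 300 citations count no more than 10 each.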

According to the Joint Committee on Quantitative Assessment of Research formed by three international mathematics institutions (5), “Citation-based statistics can play a role in the assessment of research, provided they are used properly, interpreted with caution, and make up only part of the process. Citations provide information about journals, papers, and people. We don’t want to hide that information; we want to illuminate it.” The committee has shown that using the h-index in assessing individual researchers and institutions is naïve (5). The Stanford University chemist Richard Zare believes that the h-index is a poor measure in judging researchers early in their career, and it is more a trailing, rather than a leading, indicator of professional success (6).

As early as 1963, when the Science Citation Index (SCI) was released, Garfield (7) cautioned against “the possible promiscuous and careless use of quantitative citation data for sociological evaluations, including personnel and fellowship selection”. He was worried that “in the wrong hands it might be abused” (8). Wilsdon et al have also drawn attention to the pitfalls of the “blunt use of metrics such as journal impact factors, h-indices and grant income targets” (9).

Regrettably, Indian agencies are not only using impact factors and the h-index the wrong way, but also seem to have institutionalised such misuse. Many researchers and academic and research institutions are under the spell of impact factors and the h-index, and even peer-review committees are blindly using these metrics to rank scientists and institutions without understanding their limitations, which prompted Balaram to comment, “Scientists, as a community, often worry about bad science; they might do well to ask hard questions about bad scientometrics.” (10) To be fair, such misuse of metrics is not unique to India.

The ranking of universities by Times Higher Education, Quacquarelli Symonds, Academic Ranking of World Universities and at least half a dozen other agencies has only exacerbated many a vice chancellor’s/director’s greed to improve their institution’s rating by any means, ethics be damned. The advent of the National Institutional Ranking Framework (NIRF), an initiative of the Ministry of Human Resource Development (MHRD), has brought many institutions that would not have found a place in international rankings into the ranking game.

Of late, higher educational institutions in India, including the Indian Institute of Management Bengaluru (11), have started to give monetary rewards to individual researchers who publish papers in journals with a high impact factor. Some institutions have extended this practice to the presentation of papers in conferences, writing of books, and obtaining of grants (12).

Reports on science and technology in India

The regulatory and funding agencies in India use the IF and h-index in bizarre ways. As early as 1998, an editorial in Current Science commented: “Citation counts and journal impact factors were gaining importance in discussions on science and scientists in committee rooms across the country” (13). In another editorial, the Current Science editor lamented the use of poorly conceived indicators such as the “average impact factor” “for assessing science and scientists” (14). A progress report on science in India, commissioned by the Principal Scientific Advisor to the Government of India and published in 2005, used the average IF to compare the impact of Indian research published in foreign and local journals, the impact of work done in different institutions and the impact of contributions made to different fields, as well as to compare India’s performance with that of other countries (15).

Since then there have been five other reports on science and technology in India, two of them by British think tanks and three commissioned by the Department of Science and Technology (DST), Government of India.

- In 2007, Demos brought out an engaging account of India’s Science, Technology and Innovation (STI) system (16) with insights gleaned from conversations with a cross-section of scientists, policy-makers and businessmen.

- In 2012, Nesta brought out a report (17) on the changing strengths of India’s research and innovation ecosystem and its potential for frugal innovation.

- Evidence, a unit of Thomson Reuters, prepared a report on India’s research output (18) for the DST in 2012.

- Phase 2 of the above-mentioned report (2015) carried tables comparing India’s average impact factor for each field with that of many other countries. However, Thomson Reuters included the following statement: “Journal Impact Factor is not typically used as the metric for assessment of academic impact of research work” (19).

- The DST had also commissioned a report by Elsevier’s Analytic Services based on Scopus data in 2016 (20).

We wonder why so many bibliometric projects were carried out to gather the same kind of data and insights. Also, why should one use journal impact factors instead of actual citation data in a study covering long periods? Impact factors are based on citations received within the first two years or less after publication, and in virtually all cases the number of citations to articles published in a journal drops steeply after the initial two or three years (21).
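For readers unfamiliar with the calculation: the JCR two-year impact factor of a journal for year Y is, in essence, the number of citations received in year Y by items the journal published in years Y−1 and Y−2, divided by the number of citable items (articles and reviews) it published in those two years. The sketch below assumes these counts are already in hand; the journal and its numbers are hypothetical. It makes the point above concrete: citations that a paper receives from its third year onwards never enter any two-year impact factor of the journal.

```python
# Sketch of the two-year journal impact factor for a census year.
# All counts are hypothetical; real values come from Journal Citation Reports.


def two_year_impact_factor(citations_received, citable_items, year):
    """Impact factor of a journal for `year`.

    citations_received: {publication year: citations received during `year`
                         by items the journal published in that year}
    citable_items:      {publication year: number of citable items published}
    """
    window = (year - 1, year - 2)  # only the two preceding publication years count
    numerator = sum(citations_received.get(y, 0) for y in window)
    denominator = sum(citable_items.get(y, 0) for y in window)
    if denominator == 0:
        raise ValueError("no citable items in the two-year window")
    return numerator / denominator


# Hypothetical journal: citations received in 2016, by publication year
cites_2016 = {2013: 300, 2014: 250, 2015: 190}  # 2013 items fall outside the window
items = {2013: 90, 2014: 100, 2015: 120}

print(two_year_impact_factor(cites_2016, items, 2016))  # 2.0
```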

Indicators used by different agencies

Department of Science and Technology

The Department of Science and Technology (DST) has claimed that a budget support of Rs 1.3 million per scientist provided in 2007 led to an IF aggregate of 6.6 per Rs 10 million budget support (22). While there is a positive relationship between research funding and knowledge production (measured by the number of publications and citations) (23), the following three issues must be considered:

- Aggregating the impact factors of the journals in which an individual’s or institution’s papers are published does not lead to a meaningful quantity.

- The impact factor of a journal is not determined by DST-funded papers alone; it is possible that DST-funded research might even bring down the IF of a journal.

- Researchers may get funding for several projects at the same time from different sources. Therefore, identifying the incremental impact of specific research grants is very difficult.
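The first of these issues can be illustrated with a small sketch. The DST’s exact formula is not given here, so the code ASSUMES that “IF aggregate” is the plain sum of the impact factors of the journals in which the papers appeared, scaled to Rs 10 million of funding; the two research groups and their numbers are hypothetical.

```python
# Sketch: an "IF aggregate per Rs 10 million" figure, ASSUMING the aggregate is
# the plain sum of journal impact factors. Both research groups are hypothetical.
from dataclasses import dataclass

RS_10_MILLION = 10_000_000


@dataclass
class Paper:
    journal_if: float  # impact factor of the journal, not of the paper
    citations: int     # citations the paper itself has actually received


def if_aggregate_per_10m(papers, budget_rupees):
    """Sum of journal IFs, normalised to Rs 10 million of funding."""
    aggregate = sum(p.journal_if for p in papers)
    return aggregate / (budget_rupees / RS_10_MILLION)


# Two groups, each funded with Rs 10 million, publishing in the same journals
group_x = [Paper(3.3, 0), Paper(3.3, 1)]
group_y = [Paper(3.3, 85), Paper(3.3, 40)]

for name, papers in (("X", group_x), ("Y", group_y)):
    score = if_aggregate_per_10m(papers, RS_10_MILLION)
    cites = sum(p.citations for p in papers)
    print(f"group {name}: IF aggregate per Rs 10 million = {score:.1f}, citations = {cites}")
# group X: IF aggregate per Rs 10 million = 6.6, citations = 1
# group Y: IF aggregate per Rs 10 million = 6.6, citations = 125
```

Under this assumption, the indicator cannot distinguish a group whose papers went almost uncited from one whose papers were cited more than a hundred times.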

Institutions under the DST such as the Indian Association for the Cultivation of Science (IACS) and the SN Bose National Centre for Basic Sciences set targets for the number of papers to be published, citations to those papers, citations per rupee invested, cumulative impact factor and institutional h-index in the 12th Five-Year Plan (2012–2017) (22). Aiming to publish a very large number of papers, earn a large number of citations and score high on the h-index even before conducting the research is not the right way to do research. Instead, institutions would do well to concentrate on the quality, originality and creativity of their research. Besides, while writing papers may be in one’s hands, getting them accepted in journals is not, let alone ensuring a certain number of citations and predicting the h-index that such citations would lead to.

The DST also requires applicants for its prestigious Swarnajayanthi award to provide impact factors of their publications. It believes that the h-index is a measure of “both the scientific productivity and the apparent scientific impact of a researcher” and that the index can be “applied to judge the impact and productivity of a group of researchers at a department or university” (24). It has also started assigning monetary value to citations: it awards incentive grants of up to Rs 300 million to universities on the basis of the h-index calculated by using citation data from Scopus (24).

This seems illogical, as a university’s h-index may be largely dependent on the work of a small number of individuals or departments. For example, Jadavpur University’s strength lies predominantly in the fields of Computer Science and Automation, while Annamalai University is known for research in Chemistry and Banaras Hindu University for Chemistry, Materials and Metallurgy, and Physics. Is it justified to allocate funds to a university on the basis of the citations received by a few researchers in one or two departments? Or, should the bulk of the funds be allocated to the performing departments?

Department of Biotechnology

With regard to the use of metrics, the Department of Biotechnology (DBT) seems to follow an ambivalent policy. It does not use journal impact factors in programmes that it conducts in collaboration with international agencies such as the Wellcome Trust and the European Molecular Biology Organisation (EMBO). However, when it comes to its own programmes, it insists on getting impact factor details from researchers applying for grants and fellowships. The Wellcome Trust does not use impact factors or other numeric indices to judge the quality of work; it depends on multi-stage peer review and a final in-person interview. As an associate member of EMBO, the DBT calls for proposals for “EMBO Young Investigators”, wherein the applicants are advised “not to use journal-based metrics such as impact factor during the assessment process”. Indeed, the applicants are asked NOT to include these in their list of publications (25). In their joint Open Access Policy, the DST and DBT have stated that “DBT and DST do not recommend the use of journal impact factors either as a surrogate measure of the quality of individual research articles to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions” (26).

However, the DBT considers it a major achievement that about 400 papers published by researchers funded by it since 2006 had an average IF of 4–5 (27), and it uses cumulative IF as a criterion in the selection of candidates for the Ramalingaswami Re-entry Fellowship, the Tata Innovation Fellowship, the Innovative Young Biotechnologist Award, and the National Bioscience Awards for Career Development, and in its programme to promote research excellence in the North-East Region.

Indian Council of Medical Research

A committee that evaluated the work of the Indian Council of Medical Research (ICMR) in 2014 thought it important to report that the more than 2800 research papers published by ICMR institutes had an average IF of 2.86, and that more than 1100 publications from extramural research had an average IF of 3.28 (28). The ICMR routinely uses average IF as a measure of performance of its laboratories.

Council of Scientific and Industrial Research

The Council of Scientific and Industrial Research (CSIR) has used four different indicators, viz (i) the average IF; (ii) the impact energy, which is the sum of the squares of impact factors of journals in which papers are published; (iii) the energy index (C²/P, where P is the number of papers and C is the total number of citations), calculated on the basis of papers in the target window of the preceding five years and citations received in the census year; and (iv) the number of papers published per scientist in each laboratory (29, 30).
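The four indicators, as they are described above, can be written out as follows. This is a sketch of the definitions only, not CSIR’s actual procedure, and the laboratory data are hypothetical. Two properties of the formulas are worth noting: squaring IFs gives disproportionate weight to high-IF journals (one paper in a journal with an IF of 8 contributes 64, as much as sixteen papers in journals with an IF of 2), and C²/P equals C × (C/P), that is, total citations multiplied by citations per paper.

```python
# Sketch of the four CSIR indicators as described in the text.
# Inputs are hypothetical; `window_citations` holds, for each paper in the
# five-year target window, the citations it received in the census year.


def csir_indicators(journal_ifs, window_citations, n_scientists):
    p = len(journal_ifs)          # P: number of papers in the window
    c = sum(window_citations)     # C: total citations in the census year
    return {
        "average_if": sum(journal_ifs) / p,
        "impact_energy": sum(x * x for x in journal_ifs),  # sum of squared IFs
        "energy_index": c * c / p,                          # C^2 / P
        "papers_per_scientist": p / n_scientists,
    }


lab = csir_indicators(journal_ifs=[1.0, 2.0, 3.0],
                      window_citations=[4, 0, 2],
                      n_scientists=2)
print(lab)
# {'average_if': 2.0, 'impact_energy': 14.0, 'energy_index': 12.0, 'papers_per_scientist': 1.5}
```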

University Grants Commission

The University Grants Commission (UGC) uses the cumulative IF as the major criterion for granting Mid-Career Awards and Basic Science Research (BSR) Fellowships under its Faculty Research Promotion Scheme. The cumulative IF of the papers published by the applicant should be ≥ 30 for the Mid-Career Award and ≥ 50 for the BSR Fellowship (31). From 2017, the UGC also started demanding that institutions seeking grants provide the cumulative IF and h-index for papers published in the preceding five years at both the individual and institutional levels.

National Assessment and Accreditation Council

The National Assessment and Accreditation Council’s (NAAC) online form that institutions use to provide data (32) asks for, among other things:

- “Number of citation index – range / average” – what this means is not clear.

- “Number of impact factor – range / average” under publications of departments – we presume what is expected here is the range and average IF of journals in which the papers are published; while one can report the range of IF, the average IF does not mean anything.

- “Bibliometrics of the publications during the last five years based on average citation index …” – this is defined as the average number of citations per paper indexed in any one of the three databases, viz Scopus, Web of Science and Indian Citation Index, in the previous five years. This kind of averaging does not make sense. Moreover, it seems that the NAAC is indirectly forcing all institutions seeking accreditation to subscribe to all three citation databases. Could it not mandate all institutions to set up interoperable institutional repositories from which it could harvest the bibliographic data of all papers, and then have its staff or an outsourcing agency track the citations to those papers? Would that not be a much less expensive and far more elegant solution? The NAAC also requires the “number of research papers per teacher in the journals notified on the UGC website during the last five years.” Unfortunately, the credibility of many journals in the UGC list has been questioned, as discussed in a later section of this paper, and it is surprising that the NAAC chose the UGC list as a gold standard.

- The h-index for each paper – this is patently absurd.

Can recruitment and evaluation practices be arbitrary?

University Grants Commission

As per the UGC (Minimum Qualifications for Appointment of Teachers and other Academic Staff in Universities and Colleges and Measures for the Maintenance of Standards in Higher Education) Regulations 2013 (2nd Amendment), an aspiring teacher in a university or college will be evaluated on the basis of her Academic Performance Indicator (API) score, which is based on her contribution to teaching, research publications, bringing in research projects, administration, etc. As far as research is concerned, one gets 15 points for every paper one publishes in any refereed journal and 10 for every paper published in a “non-refereed (reputed) journal.” In July 2016, the UGC increased the score for publication in refereed journals to 25 through an amendment (33). The API score for papers in refereed journals would be augmented as follows: (i) indexed journals – by 5 points; (ii) papers with IF between 1 and 2 – by 10 points; (iii) papers with IF between 2 and 5 – by 15 points; (iv) papers with IF between 5 and 10 – by 25 points (34). The UGC does not define what it means by “indexed journals”. We presume it means journals indexed in databases such as Web of Science, Scopus and PubMed. Does this mean that papers published in journals like the Economic and Political Weekly, Indian Journal of Medical Ethics, and Leonardo, which do not have an IF, are worth nothing?
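The scoring rules quoted above can be sketched as a function. The rules leave several points open, so the sketch ASSUMES that the bonuses are alternatives rather than cumulative, that the IF bands are [1, 2), [2, 5) and [5, 10], and, since no band above an IF of 10 is quoted here, it simply refuses such input rather than guess. `api_points` is a hypothetical name, not a UGC tool.

```python
# Sketch of API points for one journal paper under the rules quoted above.
# ASSUMPTIONS (not settled by the text): bonuses are alternatives, not
# cumulative; IF bands are read as [1, 2), [2, 5) and [5, 10]; no band is
# quoted for IF above 10, so such input is rejected.


def api_points(refereed, indexed=False, impact_factor=None):
    if not refereed:
        return 10  # "non-refereed (reputed) journal" (2013 rule)
    base = 25      # refereed journal, after the July 2016 amendment
    if impact_factor is None or impact_factor < 1:
        return base + 5 if indexed else base
    if impact_factor > 10:
        raise ValueError("no API band for IF above 10 in the rules quoted")
    if impact_factor >= 5:
        return base + 25
    if impact_factor >= 2:
        return base + 15
    return base + 10  # 1 <= IF < 2


# The same work, reported in journals whose IFs differ by 0.002
print(api_points(True, indexed=True, impact_factor=1.999))  # 35
print(api_points(True, indexed=True, impact_factor=2.001))  # 40
print(api_points(True))  # 25: refereed, but neither indexed nor assigned an IF
```

Whatever the exact reading of the bands, a step function of this kind awards a different score for a change in the journal’s IF that has nothing to do with the paper being scored.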

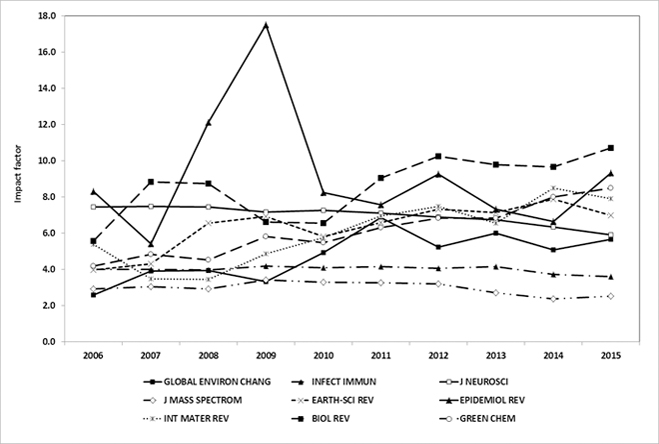

The calculation of the API on the basis of IF is inherently defective for the simple reason that the IF range varies from field to field. The IFs of mathematics, agriculture and social science journals are usually low and those of biomedical journals high (35). Also, the IF of a journal varies from year to year (Fig 1). The IF of 55% of journals increased in 2013, and that of 45% decreased (36). The IF of 49 journals changed by 3.0 or more between 2013 and 2014 (37). In some cases, the change is drastic. The IF of Acta Crystallographica Section A, for example, is around 1.5–2.5 most of the time, but it rose to 49.737 in 2009 and 54.066 in 2010 as seen from JCR, more than 20 times its usual value, because a 2008 paper reviewing the development of the computer system SHELX (38) was cited many thousands of times in a short span of time. As one would expect, in 2011 the IF of this journal dropped to its usual level (1.728).
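Why a single paper can do this is easy to see once one recalls that the IF is essentially a mean: citations divided by citable items. In the sketch below the citation counts are hypothetical, chosen only to resemble the Acta Crystallographica Section A episode in scale, and the numerator and denominator are simplified to cover the same set of items.

```python
# Sketch: one exceptionally cited paper can multiply a journal's IF, because
# the IF is a mean. The citation counts are hypothetical, chosen only to
# resemble the Acta Crystallographica Section A episode in scale.
from statistics import median

typical_year = [2] * 200             # 200 citable items, 2 citations each
outlier_year = [2] * 199 + [10_000]  # one item cited 10,000 times


def mean_citations(citations):
    """Simplified two-year IF: mean citations per citable item."""
    return sum(citations) / len(citations)


for label, cites in (("typical", typical_year), ("outlier", outlier_year)):
    print(label, mean_citations(cites), median(cites))
# typical 2.0 2.0
# outlier 51.99 2.0
```

The IF-like mean rises about 26-fold while the median, the citation count of a typical paper in the journal, does not change at all; any paper published in that window would have been credited with the outlier’s citations.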

Let us see how using journal IF affects the fortunes of a faculty member. Take the hypothetical case of a journal whose IF is around 2.000, say 1.999 or 2.001. No single paper or author is responsible for these numbers. If a couple of papers receive a few more citations than the average, the IF will be 2.001 or more and the candidate will get a higher rating; if a couple of papers receive fewer than the average number of citations, the IF will fall below 2.000, and the same paper reporting the same work will earn a lower rating. Some journals, especially those published in developing and emerging countries, are indexed by JCR in some years but dropped later (39); eg the Asian-Australasian Journal of Animal Sciences and Cereal Research Communications were delisted in 2008, when they showed an unusually large increase in self-citations and a consequent increase in IF, but were reinstated in 2010. If citations are the reason an agency wants to reward an author, it should note that some papers published in high-IF journals are not cited very frequently, while some papers published in low-IF journals are cited often within a short span of time. We found from InCites that 56 papers published during 2014–16 in 49 journals in the IF range 0.972–2.523 (JCR 2016) had been cited at least 100 times as of November 2, 2017. Thus, depending on the journal IF for faculty evaluation may not be wise.

The UGC has also set rules for the allocation of API points to individual authors of multi-authored papers: “The first and principal / corresponding author / supervisor / mentor would share equally 70% of the total points and the remaining 30% would be shared equally by all other authors” (34). This clause effectively reduces the credit that should be given to the research student. Allocating differential credit to authors on the basis of their position in the byline can lead to problems. The authors of a paper would compete to be the first or corresponding author, destroying the spirit of collaboration. Besides, some papers carry a footnote saying that all authors have contributed equally; in some papers the names of the authors are listed in alphabetical order, while in others the order of names is by rotation. There are even instances of tossing a coin to decide on the order of authors who contributed equally. Some papers are by a few authors, while others may have contributions from a few thousand authors (eg in high energy physics, astronomy and astrophysics). To cite an example of the latter, the article “Combined Measurement of the Higgs Boson Mass in pp Collisions at √s = 7 and 8 TeV with the ATLAS and CMS Experiments” in Physical Review Letters had 5126 authors. Mathematicians from around the world have also joined hands under the name Polymath to solve problems. Polymath is a crowdsourced project initiated by Tim Gowers of the University of Cambridge in 2009; it has so far published three papers, and nine more are in the pipeline.
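The 70/30 rule can be sketched as follows to show how little of a paper’s points reaches authors outside the two lead positions, and how vanishingly small each share becomes in a paper with thousands of authors. The sketch ASSUMES that the “first” and the “principal/corresponding” author are two different people and that, when there are no other authors, the leads share all the points; the quoted rule settles neither case. The function name is hypothetical.

```python
# Sketch of the UGC split of a paper's API points among its authors.
# ASSUMPTIONS: the "first" and the "principal/corresponding" author are two
# different people (n_lead=2); if there are no other authors, the leads share
# all the points. The quoted rule does not settle either case.


def ugc_author_shares(total_points, n_authors, n_lead=2):
    """Return (points per lead author, points per other author)."""
    if n_authors < 1:
        raise ValueError("a paper needs at least one author")
    n_lead = min(n_lead, n_authors)
    n_other = n_authors - n_lead
    if n_other == 0:
        return total_points / n_lead, 0.0
    return 0.7 * total_points / n_lead, 0.3 * total_points / n_other


for n in (4, 5126):
    lead, other = ugc_author_shares(25, n)
    print(f"{n} authors: lead {lead:.2f} each, others {other:.4f} each")
# 4 authors: lead 8.75 each, others 3.7500 each
# 5126 authors: lead 8.75 each, others 0.0015 each
```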

There are many so-called “refereed” and “reputed” journals in India that are substandard and predatory, so much so that India is considered to be the world’s capital for predatory journals. The publishers of these journals seduce researchers with offers of membership in editorial boards and at times add the names of accomplished researchers to their editorial boards without their consent. They also host dubious conferences and collect large sums from authors of papers. To keep such journals out of the evaluation process, the UGC decided to appoint a committee of experts to prepare a master list of journals. This committee released a list of 38,653 journals (see UGC notification No.F.1-2/2016 (PS) Amendments dated 10 January 2017). According to Curry, this move is tantamount to an abdication of responsibility by the UGC in evaluating the work of Indian researchers (40). Ramani and Prasad have found 111 predatory journals in the UGC list (41). Pushkar suspects that many people might already have become teachers and deans in colleges and universities, and even vice chancellors, on the strength of substandard papers published in such dubious journals (42). Even researchers in well-known institutions have published in such journals (43).

Following the UGC, the All India Council for Technical Education has also started using API scores to evaluate aspiring teachers in universities and colleges (44).

Ministry of Human Resource Development

The MHRD has recently introduced a “credit point” system for the promotion of faculty in the National Institutes of Technology (NIT) (45). Points can be acquired through any one of 22 ways, including being a dean or head of a department or being the first/corresponding author in a research paper published in a journal indexed in Scopus or Web of Science, or performing non-academic functions such as being a hostel warden or vigilance officer (45). As per the new regulations, no credit will accrue for publishing articles by paying article-processing charges (APC). According to the additional secretary for technical education (now secretary, higher education) at the MHRD (46), “non-consideration of publications in ‘paid journals’ for career advancement is a standard practice in IITs and other premium institutions, not only NITs.” This policy is commendable.

Medical Council of India

As per the latest version of the “Minimum qualifications of teachers in medical institutions” (47), candidates for the post of professor must have a minimum of four accepted/published research papers in an indexed/national journal as first or second author, of which at least two should have been published while he/she was an associate professor; candidates for the post of associate professor must have a minimum of two accepted/published research papers in an indexed/national journal as first or second author. Unfortunately, the Medical Council of India (MCI) has left one to guess what it means by “indexed journals”, giving legitimacy to many predatory journals indexed in Index Copernicus, which many consider to be of doubtful veracity. A group of medical journal editors had advised against the inclusion of Index Copernicus as a standard indexing service (48), but the MCI did not heed the advice. The net result is the mushrooming of predatory journals claiming to be recognised by the MCI and indexed in the Index Copernicus, and to have an IF far above those of standard journals in the same field. Many faculty members in medical colleges across the country, who find peer review an insurmountable barrier, find it easy to publish their papers in these journals (49), often using taxpayers’ money to pay APC, and meet the requirement for promotion, never mind if ethics is jettisoned along the way.

Indian Council of Medical Research

Like the UGC, the ICMR is also assigning credits for publications in “indexed journals” and these credits depend on the IF of the journals. In addition, an author gets credits for the number of times her publications are cited. The credits an author gets for a paper depend on her position in the byline as well (50).

National Academy of Agricultural Sciences

The National Academy of Agricultural Sciences (NAAS) has been following an unacceptable practice in the selection of fellows. Like many other agencies, it calculates the cumulative IF, but as many of the journals in which agricultural researchers publish are not indexed in the Web of Science and hence, not assigned an IF, NAAS assigns them IFs on its own. What is more, it has arbitrarily capped the IF of journals indexed in the Web of Science at 20 even if JCR has assigned a much higher value (51). The absurdity of this step can be seen by comparing the NAAS-assigned IF of 20 with the actual 2016 IF of Nature (>40), Science (>37) and Cell (>30) assigned by JCR. Similarly, the Annual Review of Plant Biology had an IF of 18.712 in 2007, which rose to 28.415 in 2010. Yet, the NAAS rating of this journal recorded a decrease of four points between the two years. This highlights the need for transparency in the evaluation process. The Faculty of Agriculture, Banaras Hindu University uses only the much-flawed NAAS journal ratings for the selection of faculty (52).

If the rating of journals by the NAAS is arbitrary, the criteria adopted by the Indian Council of Agricultural Research (ICAR) for the recruitment and promotion of researchers and teachers are even more so. If a journal has not been assigned a rating by the NAAS, it is rated arbitrarily by a screening committee empowered by the ICAR (53).

Clearly such policies not only help breed poor scholarship, but also encourage predatory and substandard journals. The scenario is becoming so bad that India could even apply for the “Bad Metrics” award! (See: https://responsiblemetrics.org/bad-metrics/).

In recent years, academic social networks such as ResearchGate have become popular among researchers around the world, and many researchers flaunt their ResearchGate score as some journals flaunt their IF on the cover page. While ResearchGate undoubtedly helps one follow the work of peers and share ideas (54), the ResearchGate score, which appears to be based on the number of downloads and views, number of questions asked and answered, and number of researchers one follows and one is followed by, is considered a bad metric (55).

Discussion

Research is a multifaceted enterprise undertaken in different kinds of institutions by different types of researchers. In addition, there is a large variation in publishing and citing practices in different fields. Given such diversity, it is unrealistic to expect to reduce the evaluation of research to simple measures such as the number of papers, journal impact factors and author h-indices. Unfortunately, we have allowed such measures to influence our decisions.

Consider Peter Higgs, who published just 27 papers in a career spanning 57 years (See: http://www.ph.ed.ac.uk/higgs/peter-higgs), often with five years or more between two papers. Had he been judged by the UGC’s standards during any of the several five-year periods in which he did not publish a paper, he would have been rated a poor performer! Yet the world honoured him with a Nobel Prize. Ed Lewis, the 1995 Nobel laureate in Physiology or Medicine, was another rare and irregular publisher with a very low h-index (56). Laying undue emphasis on the number of publications and on bibliometric measures might lead scientists to write several smaller papers rather than one (or a few) substantive paper(s) (23). As Bruce Alberts says, the automated evaluation of a researcher’s quality will lead to “a strong disincentive to pursue risky and potentially groundbreaking work, because it takes years to create a new approach in a new experimental context, during which no publications should be expected” (57: p 13).
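
Why a rare publisher ends up with a low h-index follows directly from the definition of the index (4): a researcher has index h if h of his or her papers have at least h citations each. Since h can never exceed the number of papers, even a sparse but highly cited record is capped. The minimal Python sketch below illustrates this bound; the citation counts are hypothetical and are not the actual records of Higgs or Lewis.

```python
def h_index(citations):
    """Return the largest h such that at least h papers have >= h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # every later paper has even fewer citations
    return h


# Hypothetical records, chosen only to illustrate that h <= number of papers.
sparse_but_seminal = [5000, 3000, 800, 400, 90]  # 5 papers, heavily cited
prolific_but_modest = [12] * 30                  # 30 papers, 12 citations each

print(h_index(sparse_but_seminal))   # prints 5
print(h_index(prolific_but_modest))  # prints 12
```

The first hypothetical record, with more than 9,000 citations in all, scores less than half of the second, which has only 360.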

In addition, any evaluation process based on metrics is liable to gaming. When the number of papers published is given weightage, the tendency is to publish as many papers as possible, regardless of their quality. This is what happened with the Research Excellence Framework (REF) exercise in the UK: the numbers of papers published before and after the REF deadline of 2007 differed by more than 35%, and the papers published during the year preceding the deadline received 12% fewer citations (58).

Many articles have been written on the misuse of IF. Its persistent misuse in research assessment led scientists and editors to formulate the San Francisco Declaration on Research Assessment (DORA) (59), which recognises the need to eliminate the use of journal-based metrics, such as the journal IF, in funding, appointment and promotion decisions and in the assessment of research, and recommends the use of article-level metrics instead.

As early as 1983, Garfield said that citation analysis was not everything and that it “should be treated as only one more, though distinctive, indicator of the candidate’s influence” (60). In his view, citation analysis helps to increase objectivity and the depth of analysis. He had also drawn attention to the flaws in peer review, quoting the experiences and views of many (60). The sociologist Robert K. Merton had pointed out that faculty evaluation letters could be tricky, since there was no methodical way of assessing and comparing the estimates provided by different evaluators, whose personal scales of judgement could vary widely (60).

Experience elsewhere

The selection and promotion of faculty worldwide is based, more or less, on metrics and peer review, with their relative importance depending on the costs involved and the academic traditions. In his report to the UK’s Minister for Universities and Science, Lord Nicholas Stern noted that “the UK and New Zealand rely close-to-uniquely on peer review, whilst Belgium, Denmark, Finland and Norway use bibliometrics for the assessment of research quality … Internationally, there is a trend towards the use of bibliometrics and simple indicators” (61).

According to Zare (62), letters of reference are far more important than metrics when evaluating researchers for academic positions. A faculty member in the Stanford University Department of Chemistry is judged not simply by the tenured faculty of the department, but also by the views of 10–15 experts outside the department, both national and international, on “whether the research of the candidate has changed the community’s view of the nature of chemistry in a positive way” (62). In contrast, Zare feels that in India too much emphasis is placed on things such as the number of publications, the h-index and the name order in the byline in assessing the value of an individual researcher (63). There are exceptions, though. The National Centre for Biological Sciences, Bengaluru, gives great importance to peer review and the quality of research publications in the selection of faculty and in granting them tenure (64). The Indian Institute of Science also gives considerable weightage to peer opinion in the selection of new faculty and in granting tenure.

As pointed out by the Research Excellence Framework (REF) review (61), systems that rely entirely on metrics are generally less expensive and less compliance-heavy than systems that use peer review. In India, with some 800 universities and 37,000 colleges, the cost of peer review for nationwide performance assessments would be prohibitive. However, the way forward would be to introduce a tenure system with the participation of external referees in all universities (as practised at the Indian Institute of Science and Stanford University).

Conclusion

Research has to be evaluated for rigour, originality and significance, and that cannot be done in a routine manner. Evaluation could become more meaningful with a shift in values from scientific productivity to scientific originality and creativity (65), but the funding and education systems seem to discourage originality and curiosity (65).

Research councils and universities need to undertake a radical reform of research evaluation (66). When hiring new faculty members, institutions ought to look not only at the publication record (or other metrics) but also at whether the candidate has really contributed something original and important to her field (65). When evaluating research proposals, originality and creativity should be given more weight than feasibility, and greater emphasis should be laid on previous achievements than on the proposed work (63). “The best predictor of the quality of science that a given scientist will produce in the near future is the quality of the scientific work accomplished during the preceding few years. It is rarely that a scientist who continually does excellent science suddenly produces uninteresting work, and conversely, someone producing dull science who suddenly moves into exciting research” (65).

There needs to be greater transparency and accountability. Even in the West, there is a perception that academia today suffers from centralised top-down management, increasingly bureaucratic procedures, teaching according to a prescribed formula, and research driven by assessment and performance targets (67). The National Institutional Ranking Framework (NIRF) exercise currently being promoted might lead to research driven by assessment and performance targets, in the same way that the REF exercise did in Britain. One may go through such exercises so long as one does not take them very seriously and uses the results as a general starting point for in-depth discussions based on details, and not as the bottom line on which decisions are based [personal communication from E D Jemmis].

Unfortunately, in India the process of accreditation of institutions has become corrupt over the years and academic autonomy has eroded (68). Indeed, even the appointments of vice chancellors and faculty are mired in corruption (69, 70, 71), and the choice of vice chancellors and directors of IITs and IISERs is “not left to academics themselves but directed by political calculations” (72). “If you can do that [demonetise], I don’t see any difficulty in [taking action] in higher education and research. The most important thing is to immediately do something about the regulatory bodies in higher education, the UGC, AICTE and NAAC,” says Balaram (68). According to him (68), “a complete revamp and depoliticisation of the three crucial bodies is a must as there needs to be some level of professionalism in education.” He opines that, unlike the NDA1 and UPA1 governments, the current government appears to be “somewhat disinterested in the area of higher education and research”.

The blame does not lie with the tools but with the users in academia and the agencies that govern and oversee academic institutions and research. They are neither well-informed about how to use the tools, nor willing to listen to those who are. Given these circumstances, the answer to the question in the title cannot be anything but “No.”

*Note

The views expressed here are those of the authors and not of the institutions to which they belong.

Acknowledgements

We are grateful to Prof. Satyajit Mayor of the National Centre for Biological Sciences, Prof. Dipankar Chatterjee, Department of Biophysics, Indian Institute of Science, Prof. N V Joshi, Centre for Ecological Sciences, Indian Institute of Science, Prof. T A Abinandanan, Department of Materials Engineering, Indian Institute of Science, Prof. E D Jemmis, Department of Inorganic and Physical Chemistry, Indian Institute of Science, Prof. Vijaya Baskar of Madras Institute of Development Studies, Prof. Sundar Sarukkai, National Institute of Advanced Studies, and Mr. Vellesh Narayanan of i-Loads, Chennai, for their useful inputs. We are indebted to the two referees for their insightful comments.

References

- Garfield E. The history and meaning of the journal impact factor. JAMA. 2006;295(1):90-3.

- Medical Research Council UK. Citation impact of MRC papers. 2016 Apr 11 [cited 2017 May 7]. Available from: https://www.mrc.ac.uk/news/browse/citation-impact-of-mrc-papers/

- Lehmann S, Jackson AD, Lautrup B. Life, death and preferential attachment. Europhys Lett. 2005;69(2):298-303. doi: 10.1209/epl/i2004-10331-2.

- Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005 Nov;102(46):16569-72. doi: 10.1073/pnas.0507655102.

- Adler R, Ewing J, Taylor P. Citation statistics: a report from the International Mathematical Union (IMU) in cooperation with the International Council of Industrial and Applied Mathematics (ICIAM) and the Institute of Mathematical Statistics (IMS). Statist Sci. 2009;24(1):1-14. doi: 10.1214/09-STS285.

- Zare RN. Assessing academic researchers. Angew Chem Int Ed. 2012;51(30):7338-9. doi: 10.1002/anie.201201011.

- Garfield E. Citation indexes in sociological and historical research. Am Doc. 1963 [cited 2017 May 7];14(4):289-91. Available from: http://garfield.library.upenn.edu/essays/V1p043y1962-73.pdf

- Garfield E. The agony and the ecstasy – The history and meaning of the journal impact factor. Paper presented at International Congress on Peer Review and Biomedical Publication, Chicago; 2005 Sep 16 [cited 2017 May 7]. Available from: http://garfield.library.upenn.edu/papers/jifchicago2005.pdf

- Wilsdon J, Allen L, Belfiore E, Campbell P, Curry S, Hill S, Jones R, Kain R, Kerridge S, Thelwall M, Tinkler J, Viney I, Wouters P, Hill J, Johnson B. The metric tide: report of the independent review of the role of metrics in research assessment and management, July 8, 2015. doi: 10.13140/RG.2.1.4929.1363.

- Balaram P. Citations, impact indices and the fabric of science. Curr Sci. 2010;99(1):857-9.

- Balaram P. Citation counting and impact factors. Curr Sci. 1998;75(3):175.

- Anand S. Indian B-schools hope cash bonuses fuel research. The Wall Street Journal, June 13, 2011 [cited 2017 May 7]. Available from: https://blogs.wsj.com/indiarealtime/2011/06/13/indian-b-schools-hope-cash-bonuses-fuel-research/

- GMRIT. Incentive policy for research and publications [cited 2017 May 7]. Available from: http://www.gmrit.org/facilities-perks.pdf

- Balaram P. Who’s afraid of impact factors. Curr Sci. 1999;76(12):1519-20.

- Gupta BM, Dhawan SM. Measures of progress of science in India. An analysis of the publication output in science and technology. National Institute of Science, Technology and Development Studies (NISTADS); 2006 [cited 2017 May 7]. Available from: http://www.psa.gov.in/sites/default/files/11913286541_MPSI.pdf

- Bound K. India: the uneven innovator. Demos publications; 2007 [cited 2017 May 7]. Available from: https://www.demos.co.uk/files/India_Final.pdf

- Bound K, Thornton I. Our frugal future: lessons from India’s innovation system. Nesta, July 2012 [cited 2017 May 7]. Available from: http://www.nesta.org.uk/sites/default/files/our_frugal_future.pdf

- Bibliometric study of India’s scientific publication outputs during 2001-10: evidence for changing trends. Department of Science and Technology, Government of India, New Delhi; July 2012 [cited 2017 May 7]. Available from: http://www.nstmis-dst.org/pdf/Evidencesofchangingtrends.pdf

- Thomson Reuters and Department of Science and Technology. India’s research output and collaboration (2005-14): a bibliometric study (phase-II). Ministry of Science and Technology, Government of India, New Delhi; August 2015 [cited 2017 May 7]. Available from: http://nstmis-dst.org/PDF/Thomson.pdf

- Huggett S, Gurney T, Jumelet A. International comparative performance of India’s research base (2009-14): a bibliometric analysis; 2016 Apr [cited 2017 May 7]. Available from: http://nstmis-dst.org/PDF/Elsevier.pdf

- Cross JO. Impact factors – the basics. In: Anderson R (ed). The E-Resources Management Handbook. The Charleston Company [cited 2017 May 7]. Available from: https://www.uksg.org/sites/uksg.org/files/19-Cross-H76M463XL884HK78.pdf

- Department of Science and Technology. Working group report for the 12th Five-Year Plan (2012-17). Ministry of Science and Technology, Government of India, New Delhi; September 2011 [cited 2017 May 7]. Available from: http://www.dst.gov.in/sites/default/files/11-wg_dst2905-report_0.pdf

- Rosenbloom JL, Ginther DK, Juhl T, Heppert JA. The effects of research and development funding on scientific productivity: academic chemistry, 1990-2009. PLoS One. 2015;10(9):e0138176. doi: 10.1371/journal.pone.0138176.

- Department of Science and Technology, Government of India. Fund for improvement of S&T infrastructure [cited 2017 May 7]. Available from: http://www.fist-dst.org/html-flies/purse.htm

- European Molecular Biology Organisation. EMBO Young Investigator Programme – Application guidelines, January 20, 2017 [cited 2017 May 7]. Available from: http://embo.org/documents/YIP/application_guidelines.pdf

- Department of Biotechnology and Department of Science and Technology. DBT and DST Open Access Policy: Policy on open access to DBT and DST funded research. Ministry of Science and Technology, Government of India, New Delhi; December 12, 2014 [cited 2017 May 7]. Available from: http://www.dbtindia.nic.in/wp-content/uploads/APPROVED-OPEN-ACCESS-POLICY-DBTDST12.12.2014.pdf

- Department of Biotechnology. Major achievements since onset. Ministry of Science and Technology, Government of India, New Delhi; 2006 [cited 2017 May 7]. Available from: http://www.dbtindia.nic.in/program-medical-biotechnology/chronic-disease-biology/major-achievements/

- Tandon PN. Report of the high-power committee: evaluation of the ongoing research activities of Indian Council of Medical Research, 2014 [cited 2017 May 7]. Available from: http://icmr.nic.in/Publications/hpc/PDF/6.pdf

- Council of Scientific and Industrial Research, Government of India. Research papers, 2008 [cited 2017 May 7]. Available from: http://www.niscair.res.in/Downloadables/CSIR-Research-Papers-2008-Synopsis.pdf

- Council of Scientific and Industrial Research, Government of India. CSIR Annual Report 2013-2014 [cited 2017 May 7]. Available from: http://www.csir.res.in/sites/default/files/CSIR_%20201314_English.pdf

- University Grants Commission, New Delhi. Minutes of the 73rd meeting of the Empowered Committee on Basic Scientific Research, 2015 [cited 2017 May 7]. Available from: https://www.ugc.ac.in/pdfnews/0070733_Minutes-of-the-73-meeting-of-EC-Dec-22,2015.pdf

- NAAC. Institutional Accreditation Manual for Self-Study Report Universities. National Assessment and Accreditation Council, 2017 [cited 2017 May 7]. Available from: http://www.naac.gov.in/docs/RAF_University_Manual.pdf

- University Grants Commission, New Delhi. Minimum qualifications for appointment of teachers and other academic staff in universities and colleges and measures for the maintenance of standards in higher education (2nd amendment) regulations, 2013, UGC, New Delhi [cited 2017 May 7]. Available from: https://www.ugc.ac.in/pdfnews/8539300_English.pdf

- University Grants Commission, New Delhi. Notification No.F.1-2/2016(PS/Amendment), 2016 [cited 2017 May 7]. Available from: https://ugc.ac.in/pdfnews/3375714_API-4th-Amentment-Regulations-2016.pdf

- Althouse BM, West JD, Bergstrom CT, Bergstrom T. Differences in impact factor across fields and over time. J Am Soc Inf Sci Technol. 2009;60(1):27-34. doi: 10.1002/asi.20936.

- Van Noorden R. New record: 66 journals banned for boosting impact factor with self-citations. Nature News Blog. 2013 Jun 19 [cited 2017 May 7]. Available from: http://blogs.nature.com/news/2013/06/new-record-66-journals-banned-for-boosting-impact-factor-with-self-citations.html

- Kiesslich T, Weineck SB, Koelblinger D. Reasons for journal impact factor changes: influence of changing source items. PLoS One. 2016 [cited 2018 Feb 8];11(4):e0154199. Available from: http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0154199

- Sheldrick GM. A short history of SHELX. Acta Cryst A. 2008;A64:112-22. doi: 10.1107/S0108767307043930.

- Davis P. Gaming the impact factor puts journal in time-out. The Scholarly Kitchen October 17, 2011 [cited 2017 May 7]. Available from: https://scholarlykitchen.sspnet.org/2011/10/17/gaming-the-impact-factor-puts-journal-in-time-out/

- Chawla DS. Indian funding agency releases list of journals used to evaluate researchers. The Scientist. February 16, 2017 [cited 2017 May 31]. Available from: http://www.the-scientist.com/?articles.view/articleNo/48505/title/Indian-Funding-Agency-Releases-List-of-Journals-Used-to-Evaluate-Researchers/

- Ramani S, Prasad R. UGC’s approved journal list has 111 more predatory journals. The Hindu September 14, 2017 [cited 2017 Nov 1]. Available from: http://www.thehindu.com/sci-tech/science/ugcs-approved-journal-list-has-111-more-predatory-journals/article19677830.ece

- Pushkar. The UGC deserves applause for trying to do something about research fraud. The Wire. June 21, 2016 [cited 2017 May 7]. Available from: https://thewire.in/44343/the-ugc-deserves-applause-for-rrying-to-do-something-about-research-fraud/

- Seethapathy GS, Santhosh Kumar JU, Hareesha AS. India’s scientific publication in predatory journals: need for regulating quality of Indian science and education. Curr Sci. 2016;111(11):1759-64. doi: 10.18520/cs/v111/i11/1759-1764.

- All India Council for Technical Education. Career Advancement Scheme for Teachers and Other Academic Staff in Technical Institutions. Notification. November 8, 2012 [cited 2017 May 1]. Available from: https://www.aicte-india.org/downloads/CAS_DEGREE_291112.PDF

- Ministry of Human Resource Development, Government of India. Notification July 28, 2017 [cited 2017 May 1]. Available from: http://nitcouncil.org.in/data/pdf/nit-acts/GazetteNotification2017.pdf

- Mukunth V. Scientists in the lurch after imprecise MHRD notice about ‘paid journals’. The Wire. October 16, 2017 [cited 2017 May 1]. Available from: https://thewire.in/187601/mhrd-open-access-nit-predatory-journals-career-advancement-impact-factor/

- Medical Council of India. Minimum qualifications for teachers in medical institutions regulations, 1998 (Amended up to 8th June, 2017), 2017 [cited 2017 May 1]. Available from: https://www.mciindia.org/documents/rulesAndRegulations/Teachers-Eligibility-Qualifications-Rgulations-1998.pdf

- Aggarwal R, Gogtay N, Kumar R, Sahni P. The revised guidelines of the Medical Council of India for academic promotions: need for a rethink. Natl Med J India. 2016;29(1):e1-1-e1-5. Available from: http://archive.nmji.in/Online-Publication/01-29-1-Editorial-1.pdf

- Ravindran V, Misra DP, Negi VS. Predatory practices and how to circumvent them: a viewpoint from India. J Korean Med Sci. 2017;32(1):160-1. doi: 10.3346/jkms.2017.32.1.160.

- ICMR. Report of the Committee for revised scoring criteria for direct recruitment of scientists. April 5, 2017 [cited 2018 Feb 8]. Available from: http://icmr.nic.in/circular/2017/33.pdf

- National Academy of Agricultural Sciences, India. NAAS Score of Science Journals. 2017 [cited 2017 May 7]. Available from: http://naasindia.org/documents/journals2017.pdf

- Bishwapati M, Lyngdoh N, Challam TA. Comparative analysis of NAAS ratings of 2007 and 2010 for Indian journals. Curr Sci. 2012 [cited 2018 Feb 8];102(1):10-12. Available from: http://www.currentscience.ac.in/Volumes/102/01/0010.pdf

- Indian Council of Agricultural Research, Government of India. Revised score-card for direct selection to senior scientific positions on lateral entry basis in ICAR-Reg., November 6, 2015 [cited 2017 May 7]. Available from: http://www.icar.org.in/files/20151109192016.

- Kintisch E. Is ResearchGate Facebook for science? August 25, 2014 [cited 2017 May 7]. Available from: http://www.sciencemag.org/careers/2014/08/researchgate-facebook-science

- Kraker P, Jordan K, Lex E. ResearchGate Score: good example of a bad metric. Social Science Space, December 11, 2015 [cited 2017 May 7]. Available from: https://www.socialsciencespace.com/2015/12/researchgate-score-good-example-of-a-bad-metric/

- Lawrence PA. Lost in publication: how measurement harms science. Ethics Sci Environ Polit. 2008;8:9-11. doi: 10.3354/esep00079.

- Alberts B. Impact factor distortions. Science. 2013;340(6134):787. doi: 10.1126/science.1240319.

- Groen-Xu M, Teixeira P, Voigt T, Knapp B. Short-Termism in Science: Evidence from the UK Research Excellence Framework. SSRN December 6, 2017 [cited 2017 May 7]. Available from: https://ssrn.com/abstract=3083692

- San Francisco Declaration on Research Assessment, 2012 [cited 2017 May 7]. Available from: https://sfdora.org/read/

- Garfield E. How to use citation analysis for faculty evaluations, and when is it relevant? Part 1. Current Contents. 1983 [cited 2017 May 7];44:5-13. Available from: http://garfield.library.upenn.edu/essays/v6p354y1983.pdf

- Department for Business, Energy and Industrial Strategy, UK. Building on success and learning from experience: an independent review of the research excellence framework. July 2016 [cited 2017 Jul 28]. Available from: https://www.gov.uk/government/uploads/system/uploads/attachment_data/file/541338/ind-16-9-ref-stern-review.pdf

- Zare RN. Research Impact. Curr Sci. 2012;102(1):9.

- Zare RN. The obsession with being number one. Curr Sci. 2017;112(9):1796.

- National Centre for Biological Sciences. Tenure Process. Date unknown [cited 2017 May 7]. Available from: https://www.ncbs.res.in/tenure_process

- Keller L. Uncovering the biodiversity of genetic and reproductive systems: time for a more open approach. The American Naturalist. 2007;169(1):1-8. doi: 10.1086/509938.

- Chavarro D, Ràfols I. Research assessments based on journal rankings systematically marginalise knowledge from certain regions and subjects. LSE Impact Blog 2017 [cited 2017 Dec 5]. Available from: http://blogs.lse.ac.uk/impactofsocialsciences/2017/10/30/research-assessments-based-on-journal-rankings-systematically-marginalise-knowledge-from-certain-regions-and-subjects/

- Martin B. What is happening to our universities? Working paper series – SWPS 2016-03 (January), University of Sussex, UK. January 2016 [cited 2017 Dec 5]. Available from: https://www.sussex.ac.uk/webteam/gateway/file.php?name=2016-03-swps-martin.pdf&site=25%3E

- Ghosh S. If Modi can demonetise, he can also revamp higher education and research. IANSlive. 2017. Available from: http://ianslive.in/index.php?param=news/If_Modi_can_demonetise_he_can_also_revamp_higher_education_and_research-539077/News/34

- Gohain MP. NAAC asked to rework accreditation process for higher education institutes. Times of India. April 10, 2017 [cited 2017 Dec 5]. Available from: https://timesofindia.indiatimes.com/home/education/news/naac-asked-to-rework-accreditation-process-for-higher-education-institutes/articleshow/58100697.cms

- Ilangovan R. Course correction. Frontline. May 17, 2013 [cited 2017 Dec 5]. Available from: http://www.frontline.in/the-nation/course-correction/article4656924.ece

- Ravi S. Restructuring the Medical Council of India to eliminate corruption. Brookings. September 19, 2017 [cited 2017 Dec 5]. Available from: https://www.brookings.edu/opinions/restructuring-the-medical-council-of-india-to-eliminate-corruption/

- Guha R. Nurturing excellence. Indian Express. January 23, 2018 [cited 2018 Feb 8]. Available from: http://indianexpress.com/article/opinion/columns/nurturing-excellence-india-education-system-universities-5035178/